Identification before estimation

A critical step in causal inference that many analysis projects miss

Many important questions in business and life are causal questions: Which initiative should we invest in? Is this investment worth it? What impact did this individual have last year? Which candidate should we hire? Even existential questions like “What is the purpose of life?” are grounded in causality.

Unfortunately, analysts often rush into statistical estimation without confirming whether they can credibly answer a causal question at all[1]. Causal identification is how we make that determination.

Identification[2] is the process of determining whether a specific parameter (e.g., the effect of A on B) can be credibly estimated from the available data, given our assumptions. It tells us, before we analyze the data, whether we have a clear path to estimating the causal effect we care about.
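One way to make this concrete uses Pearl’s do-notation. The effect we care about is defined in terms of an intervention (setting A), which we usually can’t observe directly. Identification asks whether that quantity can be rewritten purely in terms of the observed data. A sketch, assuming a binary treatment A, an outcome B, and a hypothetical set of measured variables C that satisfies the backdoor criterion, with both treated and untreated units present at every level of C:

```latex
\begin{aligned}
\tau &= \mathbb{E}[B \mid do(A=1)] - \mathbb{E}[B \mid do(A=0)] \\
\mathbb{E}[B \mid do(A=a)] &= \sum_{c} \mathbb{E}[B \mid A=a,\, C=c]\; P(C=c)
\end{aligned}
```

The left-hand sides involve do(·) and can’t be read off the data; the right-hand side uses only conditional means and probabilities we can estimate. If no such rewriting exists under our assumptions, the effect is not identified, and more data or fancier models won’t change that.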

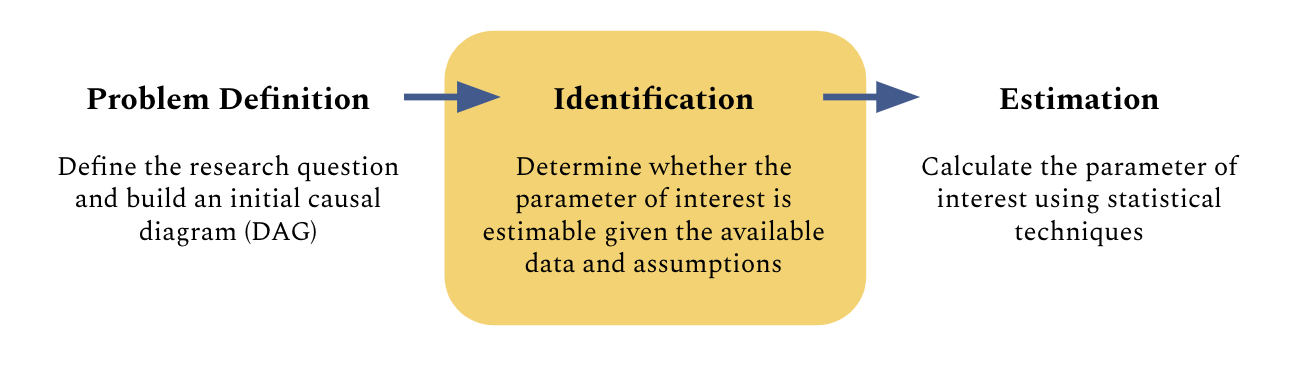

Here’s how it fits into the analysis workflow:

By requiring us to state and confront our assumptions, identification provides a level of clarity and scrutiny that estimation alone does not.

Why identification matters

When we want something to be true, we can often convince ourselves that it is (or at least forget that it might not be). Causal identification helps us short-circuit this bias when estimating the effect of something on something else.

Unfortunately, identification doesn’t seem to have been emphasized enough in education or the workplace to become part of the standard analysis workflow[3]. This is a problem because inadequate identification results in:

Wasted effort on unanswerable questions

Biased or invalid claims

My critique is not about faulty estimation methods. It’s about inadequate identification[4]. No estimation procedure can compensate for an identification failure. Just because we have statistical validity[5] doesn’t mean we have internal validity[6], let alone external validity[7].

Identification: A (brief) how-to

Unlike estimation, identification generally does not require deep knowledge of statistical techniques. It can be conducted by anyone with strong logical faculties, domain expertise relevant to the research question, and familiarity with causal inference principles.

1. Pre-work

Start by defining the research question and sketching an initial causal diagram (a DAG, or directed acyclic graph) based on what you know about the data generating process (i.e., how the system works). This DAG encodes assumptions about the relationships between variables, and those assumptions are what make identification possible.
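In code, a DAG can be nothing more than a list of directed edges. Here’s a minimal Python sketch using hypothetical variables (A is the treatment, B the outcome, C a common cause of both) that records the assumed arrows and checks that the graph really is acyclic, using Kahn’s topological-sort algorithm:

```python
from collections import defaultdict, deque

# Hypothetical DAG: each (cause, effect) pair is an assumed arrow.
# C -> A and C -> B make C a confounder; A -> B is the effect of interest.
EDGES = [("C", "A"), ("C", "B"), ("A", "B")]


def parents_of(edges):
    """Map each node to the set of nodes with arrows pointing into it."""
    parents = {}
    for cause, effect in edges:
        parents.setdefault(cause, set())  # root nodes get an empty set
        parents.setdefault(effect, set()).add(cause)
    return parents


def is_acyclic(edges):
    """Kahn's algorithm: the graph is acyclic iff every node can be removed
    once all of its parents have been removed."""
    children = defaultdict(list)
    indegree = defaultdict(int)
    nodes = set()
    for cause, effect in edges:
        children[cause].append(effect)
        indegree[effect] += 1
        nodes.update((cause, effect))
    queue = deque(n for n in nodes if indegree[n] == 0)
    removed = 0
    while queue:
        node = queue.popleft()
        removed += 1
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    return removed == len(nodes)


if __name__ == "__main__":
    print(parents_of(EDGES))
    print(is_acyclic(EDGES))
```

Every arrow is an assumption, and so is every missing arrow: leaving out B -> A, for instance, asserts that there is no reverse causation. Adding an arrow like B -> C would create a cycle, and `is_acyclic` would return `False`.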

Here’s an example of a DAG:

2. Refine the DAG

Make sure the DAG is complete and defensible by seeking additional expert input, reviewing existing research, and conducting exploratory data analysis to identify omitted variables. Continue iterating until the DAG is sufficiently defensible.

3. Check for identifiability

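Apply causal inference rules[8] to determine whether you can credibly estimate the parameter of interest. In the common backdoor case, you are looking for a set of variables that, once controlled for, blocks every non-causal path between the treatment (A) and the outcome (B) without opening new ones, so that the association that remains reflects the effect of A on B.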
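For small graphs, this check can be automated. Below is a minimal sketch (written from scratch, not any particular library’s API) of the standard moralization test for d-separation, plus the backdoor criterion built on it: a candidate adjustment set is valid if it contains no descendants of the treatment and d-separates treatment from outcome once the treatment’s outgoing arrows are removed. The graph is hypothetical: C confounds A and B, and A affects B only through a mediator M.

```python
from itertools import combinations


def d_separated(edges, x, y, given):
    """Moralization test: x and y are d-separated by `given` iff they are
    disconnected in the moral graph of the ancestors of {x, y} | given,
    after deleting the nodes in `given`."""
    given = set(given)
    parents = {}
    for cause, effect in edges:
        parents.setdefault(effect, set()).add(cause)

    # 1. Keep only x, y, the conditioning set, and all of their ancestors.
    keep = {x, y} | given
    stack = list(keep)
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in keep:
                keep.add(p)
                stack.append(p)

    # 2. Moralize: link each node to its parents, "marry" co-parents,
    #    and drop arrow directions.
    adjacent = {node: set() for node in keep}
    for child in keep:
        ps = parents.get(child, set())
        for p in ps:
            adjacent[p].add(child)
            adjacent[child].add(p)
        for a, b in combinations(ps, 2):
            adjacent[a].add(b)
            adjacent[b].add(a)

    # 3. Delete the conditioning set and check whether y is reachable from x.
    seen, stack = {x}, [x]
    while stack:
        node = stack.pop()
        if node == y:
            return False
        for nxt in adjacent[node] - given:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return True


def descendants(edges, node):
    """All nodes reachable from `node` by following arrows forward."""
    children = {}
    for cause, effect in edges:
        children.setdefault(cause, set()).add(effect)
    found, stack = set(), [node]
    while stack:
        for child in children.get(stack.pop(), ()):
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def satisfies_backdoor(edges, treatment, outcome, adjust):
    """Backdoor criterion: `adjust` contains no descendants of the treatment
    and blocks every path that enters the treatment through an incoming arrow."""
    if set(adjust) & descendants(edges, treatment):
        return False
    # Removing the treatment's outgoing arrows leaves only backdoor paths.
    backdoor_graph = [(c, e) for c, e in edges if c != treatment]
    return d_separated(backdoor_graph, treatment, outcome, adjust)


# Hypothetical DAG: C confounds A and B; A affects B through mediator M.
EDGES = [("C", "A"), ("C", "B"), ("A", "M"), ("M", "B")]

if __name__ == "__main__":
    for candidate in [set(), {"C"}, {"M"}, {"C", "M"}]:
        print(sorted(candidate), satisfies_backdoor(EDGES, "A", "B", candidate))
```

Here only `{"C"}` qualifies: the empty set leaves the path A <- C -> B open, and M is a descendant of A. The same machinery shows why “control for everything” isn’t a safe default: conditioning on a collider (a common effect of A and B) opens a path that was previously blocked.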

Sometimes these rules indicate that you can’t estimate the parameter of interest. That’s OK. You have a few options:

Change the parameter of interest: You might not be able to estimate the effect of A on B, but there may be other useful parameters that you can estimate

Capture the necessary data: If unmeasured variables are creating bias, it may be economical to measure them

Identify another model: There may be other models whose assumptions are more defensible (e.g. you might look for a good instrumental variable)
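To see how an instrument can rescue an effect that adjustment can’t, here’s a small simulation sketch with made-up numbers. U is an unmeasured confounder, so there is no way to adjust for it, and Z is a hypothetical instrument that shifts A but reaches B only through A. The simple IV ratio estimator (equivalent to two-stage least squares with a single instrument) recovers the true effect that a naive regression overstates.

```python
import random


def covariance(xs, ys):
    """Sample covariance of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


def simulate(n=100_000, effect=2.0, seed=0):
    """Hypothetical data: U is an unmeasured confounder of A and B;
    Z is an instrument that shifts A and reaches B only through A."""
    rng = random.Random(seed)
    z, a, b = [], [], []
    for _ in range(n):
        zi = rng.gauss(0, 1)
        ui = rng.gauss(0, 1)  # never passed to the estimators below
        ai = 0.8 * zi + ui + rng.gauss(0, 1)
        bi = effect * ai + 3.0 * ui + rng.gauss(0, 1)
        z.append(zi)
        a.append(ai)
        b.append(bi)
    return z, a, b


def naive_slope(a, b):
    """OLS slope of B on A; biased here because U is omitted."""
    return covariance(a, b) / covariance(a, a)


def iv_estimate(z, a, b):
    """Single-instrument IV estimate: cov(Z, B) / cov(Z, A)."""
    return covariance(z, b) / covariance(z, a)


if __name__ == "__main__":
    z, a, b = simulate()
    print(f"naive OLS: {naive_slope(a, b):.2f}")    # well above the true 2.0
    print(f"IV:        {iv_estimate(z, a, b):.2f}")  # close to 2.0
```

The IV estimate works here only because the simulation guarantees the instrument’s assumptions (Z affects A, and has no other route to B). In real data, those assumptions are exactly what has to be defended.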

4. Proceed with estimation

If the parameter is identifiable, select your estimation method(s) based on the assumptions in your DAG (e.g. backdoor or frontdoor adjustment, instrumental variables, regression discontinuity, difference-in-differences, and more[9]).
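Once the DAG tells you which variables satisfy the backdoor criterion, the estimate itself can be simple. Here’s a minimal sketch of backdoor adjustment by stratification on simulated data with made-up numbers: C is a hypothetical measured confounder and the true effect of A on B is 1.0. It compares treated and untreated units within each level of C, then averages those comparisons, weighted by how common each level is. In practice you might use regression, matching, or weighting instead; they lean on the same identification step.

```python
import random
from collections import defaultdict


def simulate(n=100_000, effect=1.0, seed=1):
    """Hypothetical data matching the DAG C -> A, C -> B, A -> B.
    C is a measured binary confounder; A is a binary treatment."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        c = int(rng.random() < 0.5)
        a = int(rng.random() < (0.8 if c else 0.2))  # C makes treatment likelier
        b = effect * a + 3.0 * c + rng.gauss(0, 1)
        rows.append((c, a, b))
    return rows


def mean(values):
    return sum(values) / len(values)


def naive_difference(rows):
    """E[B | A=1] - E[B | A=0]: mixes the effect of A with the effect of C."""
    treated = [b for _, a, b in rows if a == 1]
    control = [b for _, a, b in rows if a == 0]
    return mean(treated) - mean(control)


def backdoor_adjusted(rows):
    """Sum over c of P(C=c) * (E[B | A=1, C=c] - E[B | A=0, C=c])."""
    strata = defaultdict(lambda: {0: [], 1: []})
    for c, a, b in rows:
        strata[c][a].append(b)
    total = 0.0
    for c, groups in strata.items():
        if not groups[0] or not groups[1]:
            raise ValueError(f"no treated or untreated rows when C={c}")
        weight = (len(groups[0]) + len(groups[1])) / len(rows)
        total += weight * (mean(groups[1]) - mean(groups[0]))
    return total


if __name__ == "__main__":
    rows = simulate()
    print(f"naive:    {naive_difference(rows):.2f}")   # inflated by C
    print(f"adjusted: {backdoor_adjusted(rows):.2f}")  # close to 1.0
```

The `ValueError` guards the positivity assumption: if some level of C has no treated (or no untreated) units, the data can’t support that comparison, no matter what the DAG says.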

For the non-analysts

If you don’t have deep statistical knowledge, understanding identification will help you apply your domain expertise and critical thinking to improve the quality of analysis projects. Ask questions like:

What assumptions are needed for these claims to be true?

Make sure you understand and agree with the assumptions. Inaccurate assumptions can result in inaccurate conclusions.

How sensitive are the findings to these assumptions? What happens if they aren’t true?

If the analyst doesn’t know, suggest they run a sensitivity analysis to see how much a particular assumption influences the results. If a given assumption has a lot of leverage, it may justify closer scrutiny.
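One widely used sensitivity analysis for unmeasured confounding is the E-value (VanderWeele and Ding, 2017). It asks how strongly an unmeasured confounder would have to be associated with both the treatment and the outcome (on the risk-ratio scale, beyond the measured covariates) to fully explain away the observed effect. Here’s a minimal sketch for a point estimate; the example risk ratio is hypothetical, and this is only one of several approaches an analyst might use.

```python
import math


def e_value(risk_ratio):
    """E-value for a point estimate on the risk-ratio scale.
    Protective estimates (RR < 1) are inverted first so the
    formula RR + sqrt(RR * (RR - 1)) applies."""
    if risk_ratio <= 0:
        raise ValueError("risk ratio must be positive")
    rr = risk_ratio if risk_ratio >= 1 else 1 / risk_ratio
    return rr + math.sqrt(rr * (rr - 1))


if __name__ == "__main__":
    # Hypothetical finding: the treated group churns at twice the rate.
    print(round(e_value(2.0), 2))  # 3.41
```

An E-value of 3.41 means an unmeasured confounder would need a risk-ratio association of at least about 3.4 with both the treatment and the outcome to reduce the estimate to no effect. Whether a confounder that strong is plausible is a domain question, which is exactly where a non-analyst can contribute.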

Can I see the DAG?

If they haven’t built a DAG, encourage them to do so. This is a great way to communicate the key assumptions that the conclusions depend on.

Consider what might be missing from the graph. Unobserved confounders and reverse causation are often left out unintentionally and can seriously undermine the validity of the analysis.

What have we done to falsify these assumptions?

There are a number of ways an analyst can test their assumptions. For the important assumptions, make sure they have made a serious effort to falsify them. If they need guidance, you can point them to Causal Inference: The Mixtape, which outlines key assumptions and tests for various estimation approaches.

For the skeptics

If anything in this post seems wrong to you, let me know! I’m quick to admit that I have much to learn in this domain. I’m also skeptical of anyone who tells me I’m wrong without a good reason.

If you haven’t yet, I highly recommend reading some of the popular literature on causal inference. I started with The Book of Why by Judea Pearl, and it was a great introduction.

For a hands-on exercise, consider reverse engineering a DAG[10] based on a recent analysis project. Ask yourself the following:

Does the implied DAG match your understanding of how the world works?

Does it exclude any unobserved confounders?

Is there a possibility of reverse causation?

Update your DAG based on these insights and test whether the original conclusions still hold. You can also run a sensitivity analysis on your newly uncovered assumptions to determine whether they would have a meaningful impact on your conclusions[11].

Notes

[1] The typical offender in CX (customer experience) is the widespread use of regression results to label variables “key drivers” even when they may not be causal, and to make claims like “[x]% increase in [CX score] results in [y]% improvement in [customer behavior].”

[2] Identification can be applied to different aspects of an analysis, such as parameter identification, statistical identification, and structural identification. In this article, I’m focused on causal identification.

[3] I’m not sure why this is the case. It may be that advances in causal inference have not yet become mainstream. It may also stem from the data science community’s historical focus on prediction problems rather than causation. Further, business environments tend to value quick insights over discipline and rigor. There also remains a widespread misinterpretation of statistics like p-values.

[4] I have received surprising responses over the years from experienced analysts to my critiques about the identifiability of their CX-related research questions. They seemed to think I was questioning their technical methods, when I was actually critiquing whether they could make credible causal inferences given their data and assumptions.

[5] Statistical validity: Whether we have used the proper statistical techniques and interpreted the results accurately

[6] Internal validity: Whether the results reflect a true causal relationship rather than a spurious association

[7] External validity: How well the results can be generalized to other environments, customer groups, time periods, etc.

[8] A walkthrough of these rules falls outside the scope of this post. If they interest you, d-separation is a good place to start. Even if you aren’t a statistician, I think you’ll find the rules accessible.

[9] A description of these methods falls outside the scope of this post. If they interest you, check out Causal Inference: The Mixtape by Scott Cunningham for a great list.

[10] dagitty.net offers a free, easy-to-use, web-based tool to build directed acyclic graphs.

[11] Yes, the title of this post is a Brandon Sanderson reference. I just finished Wind and Truth.